Okay, it's finally up. I'm talking about the Sguil demo server. It runs Sguil from CVS, and I'm putting it up so that everyone can check out the latest features in the development cycle.

The demo server details are shown below:

Server: nsm.kicks-ass.org

Port: 7734

Username: sguil

Password: leave it blank

Since this is just for anonymous login, there is no password. I have a very poor internet link (thanks to my wonderful ISP), so if you have problems or delays connecting to the demo server, please be patient. By the way, you may also need the Sguil client from CVS in order to connect to the demo server. If you are on a *nix platform, just run -

shell>cvs -d:pserver:anonymous@sguil.cvs.sourceforge.net:/cvsroot/sguil checkout sguil

You don't need to install anything else if you already have all the dependencies for the Sguil client installed; just run sguil.tk in the client directory. If you are using Ubuntu Linux, here's how to get the Sguil client installed the painless way. Have fun.

Enjoy (;])

Wednesday, January 31, 2007

Tuesday, January 30, 2007

PgOSS Meetup Cancelled

I'm sorry to announce that the event has to be cancelled because it falls on the second day of Thaipusam and many people are unable to attend. We will try to reschedule it as soon as possible, and hopefully everyone can make it next time.

If you have posted the event on mailing lists or anywhere else, please let them know it has been called off. Thanks.

Peace :]

Sunday, January 28, 2007

PgOSS 2nd Meetup

After a long delay, we have finally organized the second Penang Open Source Software Meetup. There will be two presentations, followed by the usual teh tarik session. The meetup details are below -

Date: 2007 Feb 2nd

Time: 7:45pm - 9:15pm

Venue: University Science Malaysia (Penang)

Presentation Topics:

- OSS General (geek00L)

- Open Source Web Development with Grails (Sey)

By the way, we will also discuss a security meetup, now that mydefcon is long gone. If you live nearby, feel free to join us.

Enjoy and Cheers ;]

Tuesday, January 23, 2007

TcpXtract - 3gp

This is the third part of my tcpxtract write-up series; I have written two previous posts about it. I never thought I would write this much about tcpxtract (the tool is kind of buggy), but it is really useful, especially when you need to extract certain types of files.

Last week mypapit told me about the 3gp media file format. 3gp is the multimedia container format defined by the 3rd Generation Partnership Project (3GPP) for third-generation mobile phones, and most mobile phones nowadays ship with 3GPP and 3GPP2 content capture and playback capabilities. As a result, most pr0n video clips aimed at mobile phone users are distributed in 3gp format. I don't really know much about it, as I'm still using an old phone.

Thanks again to mypapit, who pointed me to where I could get some 3gp files, so that I had a chance to look at the file header and write a tcpxtract signature for it. I logged the network traffic with tcpdump while downloading the 3gp file. If you administer many servers, you probably don't want to dig 3gp files out of every one of them, as that is a lot of work; it's better to detect and identify them at the network level so that you can easily eliminate them.

One good resource I found for writing file signatures is -

http://filext.com/detaillist.php?extdetail=3gp&Search=Search

However, the header listed there is too common in packet payloads; I don't think 00 00 00 at the beginning offset makes a good signature. I needed to dig further to write a more accurate signature for 3gp. I downloaded the file and renamed it to pr0n.3gp.

shell>file pr0n.3gp

pr0n.3gp: ISO Media, MPEG v4 system, 3GPP (H.263/AMR)

Then I examined its data in hex/ASCII format and identified the fixed string ftyp3gp4, which comes right after 00 00 00 and a fourth, variable byte. Before writing the signature, I tested it with ngrep -

shell>ngrep -i -I 3gp.pcap -t 'ftyp3gp4'

input: 3gp.pcap

match: ftyp3gp4

.....

shell>ngrep -I 3gp.pcap -xX '0x6674797033677034'

input: 3gp.pcap

match: 0x6674797033677034

.....
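The hex string given to ngrep -X and the \x escapes in a tcpxtract signature are both just the ASCII bytes of ftyp3gp4 written out in hexadecimal. A minimal Python sketch for deriving both forms from a literal string, so the bytes don't have to be looked up by hand (the helper names are hypothetical and not part of either tool):

```python
def to_ngrep_hex(text: str) -> str:
    """Hex pattern in the 0x... form passed to ngrep -X in this post."""
    return "0x" + text.encode("ascii").hex()


def to_tcpxtract_escapes(text: str) -> str:
    r"""Byte escapes in the \xNN form used in tcpxtract.conf signatures."""
    return "".join(f"\\x{byte:02x}" for byte in text.encode("ascii"))


if __name__ == "__main__":
    print(to_ngrep_hex("ftyp3gp4"))          # 0x6674797033677034
    print(to_tcpxtract_escapes("ftyp3gp4"))  # \x66\x74\x79\x70\x33\x67\x70\x34
```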

Now it should be pretty accurate. When I examined several 3gp file variants, the fourth byte varied, so I added the signature to tcpxtract.conf as below, leaving that byte unspecified -

3gp(1000000, \x00\x00\x00\?\x66\x74\x79\x70\x33\x67\x70\x34);

I got a syntax error when I added this signature; it appears the file type (extension) can only contain letters, not digits (pretty odd and buggy). So I had to change it from 3gp to tgp.

tgp(1000000, \x00\x00\x00\?\x66\x74\x79\x70\x33\x67\x70\x34);

Then I executed -

shell>mkdir 3gp-extract

shell>tcpxtract -f 3gp.pcap -o 3gp-extract

Then I examined the extracted file -

shell>file 3gp-extract/00000000.tgp

3gp-extract/00000000.tgp: ISO Media, MPEG v4 system, 3GPP (H.263/AMR)
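To sanity-check what the tgp signature is meant to match, here is a minimal Python sketch that scans a raw byte buffer (for example a reassembled HTTP response) for three zero bytes, any single byte, then ftyp3gp4, treating the fourth byte as a wildcard the way the signature intends. One assumption about why that byte varies: in the ISO media format that file reports, a file starts with an ftyp box whose first four bytes are its big-endian size, and the box type ftyp is followed by the major brand (3gp4 here), so the variable byte is most likely the low byte of that size. The function name and sample payload are hypothetical.

```python
import re
import struct
from typing import List, Tuple

# Three zero bytes, one wildcard byte, then the literal "ftyp3gp4".
# re.DOTALL lets "." match any byte value, including 0x0a (newline).
SIG_3GP = re.compile(rb"\x00\x00\x00.ftyp3gp4", re.DOTALL)


def find_3gp_headers(data: bytes) -> List[Tuple[int, int]]:
    """Return (offset, box_size) for every signature hit in data."""
    hits = []
    for match in SIG_3GP.finditer(data):
        start = match.start()
        # Assumption: the four bytes before "ftyp" are the box size, big-endian.
        (box_size,) = struct.unpack(">I", data[start:start + 4])
        hits.append((start, box_size))
    return hits


if __name__ == "__main__":
    # Hypothetical payload: an HTTP header followed by the start of a 3gp file.
    payload = (b"HTTP/1.1 200 OK\r\n\r\n"
               + b"\x00\x00\x00\x1cftyp3gp4"
               + b"\x00" * 8)
    print(find_3gp_headers(payload))  # [(19, 28)]
```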

Now that I've got the signature right, time to move on. Sorry guys, no phone pr0n for you next time!

Cheers (:P)

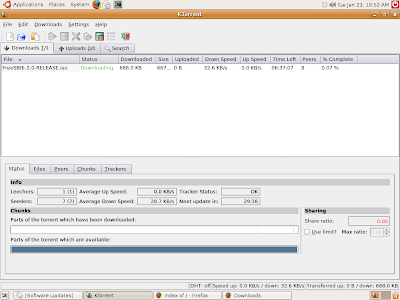

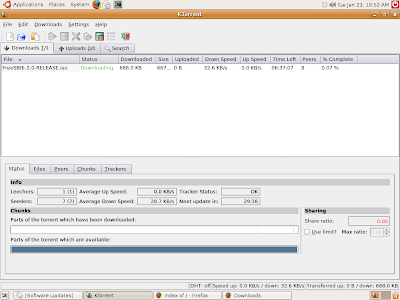

BitTorrent Clients

I remember showing some of the user applications I use daily, so here's a new post about one of the applications I use on my desktop (not my workstation).

Which BitTorrent client do you use? Many people have asked me, and I've seen this question asked in various forums. On the Windows platform, people tend to use either uTorrent or BitComet. So what do we have in the OSS arsenal?

All I hear about are Azureus and BitTornado.

I don't really like either of them; I use KTorrent.

I know user applications are always a matter of preference; I myself love applications that are simple and slick, with a clean UI design. KTorrent's other best features are that it supports encryption and doesn't consume much CPU or memory.

Here are the KTorrent screenshots -

In fact, when I checked all the connected peers, none of them seemed to be using KTorrent; they were all on other popular clients. Try out KTorrent, I bet you will love it.

Enjoy :]

Friday, January 19, 2007

Helix: Mounting ufs2

I have a machine lying around that previously had FreeBSD installed, and I needed to copy everything off its hard drive. I decided to use Helix again, as it is pretty easy and the data can be transferred over the network without much configuration.

Once logged in, I launched a terminal and tried to mount the UFS file system. The hard drive was attached through an external IDE-to-USB connector, so it showed up as an sd* device. As usual, I ran this -

shell>mount -t ufs /dev/sda1 /media/sda1

I got a "wrong fs type" error, but I remembered that I had installed it with UFS2, the default file system used by FreeBSD. After digging through the man page, I figured out this -

shell>mount -t ufs -o ufstype=ufs2 /dev/sda1 /media/sda1

Then I just enabled the SSH server, and everything could be transferred over the network with scp, or just netcat.

Enjoy ;]

Wednesday, January 17, 2007

FreeBSD 6.2 Released

I just got this toy; it's pretty cool, as it can be resized whenever you want. I'm still looking for another toy, most probably Puffy, but it's hard to find a good one. Right now RedDevil is alone, and he needs his buddy Puffy to totally match my blog title. So what's new? Yes, FreeBSD 6.2-RELEASE is finally here; check out the download mirrors and pick whichever works well for you.

http://mirrorlist.freebsd.org/FBSDsites.php

To see what's new and all the changes, check the release notes at

http://www.freebsd.org/releases/6.2R/relnotes-i386.html

I'm interested in this feature added in this release -

The enc(4) IPsec filtering pseudo-device has been added. It allows firewall packages using the pfil(9) framework to examine (and filter) IPsec traffic before outbound encryption and after inbound decryption.

This is pretty neat when you need to snoop on VPN connections, since it gives monitoring devices visibility into traffic inside the tunnel.

Time to upgrade when possible!!!!!

Enjoy ;]

Thursday, January 11, 2007

Santy or s8 - the analysis process

While chatting with folks in #snort-gui on freenode, David brought up the interesting s8 probes targeting web servers. As I'm seeing those probes as well, I wanted to figure out what was happening on the network and why they are running in the wild.

After discussing it with David, I tried googling instead of diving into the network data, but Google didn't return much useful information about the probes. In case you don't know what I'm talking about regarding the s8 mystery, here are some entries -

1168444804.073301 %252740 GET /s8Region.asp

1168444830.868371 %252837 GET /s8qq.txt (404 "Not Found" [394] blablalo.com)

1168444909.394569 %253078 GET /s8qq.txt (404 "Not Found" [387] blablalo.com)

1168444992.374820 %253366 GET /eWebEditor/db/s8ewebeditor.mdb (404 "Not Found" [412] blablalo.com)

1168452273.423501 %275283 GET /s8qq.txt (404 "Not Found" [389] forum.blablalo.net)

1168453495.280041 %278412 GET /s8showerr.asp?BoardID=0&ErrCodes=54&action=<script>JavaScript:alert(document.cookie);</script> (404 "Not Found" [390] blablalo.org)

1168453512.055104 %278412 GET /s8flash/downfile.asp?url=jackie/../../conn.asp (404 "Not Found" [397] blablalo.org)
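The entries above start with Unix epoch timestamps (seconds.microseconds), while the Apache log excerpts later in this post use local time at +0800, so correlating the two means converting one into the other. A small Python sketch, assuming the first whitespace-separated field of each entry is the epoch timestamp (the helper name is hypothetical):

```python
from datetime import datetime, timedelta, timezone


def epoch_to_local(entry: str, utc_offset_hours: int = 0) -> str:
    """Render the leading epoch timestamp of a log entry as ISO-8601.

    Microseconds are truncated so the result lines up with the
    second-resolution timestamps in Apache logs.
    """
    seconds = float(entry.split()[0])
    zone = timezone(timedelta(hours=utc_offset_hours))
    return datetime.fromtimestamp(seconds, tz=zone).isoformat(timespec="seconds")


if __name__ == "__main__":
    entry = "1168444804.073301 %252740 GET /s8Region.asp"
    print(epoch_to_local(entry))      # 2007-01-10T16:00:04+00:00
    print(epoch_to_local(entry, 8))   # 2007-01-11T00:00:04+08:00
```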

I have renamed all the hostnames to blablalo, which I don't think hurts. So it is all about HTTP GET requests for files with an s8 prefix. Since those requests were unsuccessful (404) and I don't have the requested files, I had no clue what they were, so I tried to figure out what kind of domains they were targeting. After some information gathering, it appears that all the sites crawled by the s8 requests are powered by the Discuz content management system. Discuz seems to be very popular in China, and it offers both ASP- and PHP-based solutions.

To analyze further, I decided to go through the web server logs looking for the s8 strings, then extract all the source IPs and run whois on them to help with correlation. Interestingly, this helped me reach a conclusion. Below are the commands I ran inside the Apache logs directory -

shell>for i in `egrep -i '/s8[a-z0-9]*\.asp' * \
| awk '{ print $1 }' | cut -f 2 -d : | sort -u`; \
do jwhois $i >> s8whois.log; done
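For anyone who would rather do the extraction step in a script, here is a rough Python equivalent of the pipeline above. It is a sketch assuming Apache common or combined log format, where the client address is the first field; addresses are collected in a set, so repeats collapse even when they are not on adjacent lines. The whois lookups are still left to jwhois.

```python
import re
from typing import Iterable, List

# Same pattern as the egrep above: "/s8", then letters/digits, then ".asp"
# (this also matches ".aspx"), compared case-insensitively.
S8_PATTERN = re.compile(r"/s8[a-z0-9]*\.asp", re.IGNORECASE)


def s8_source_ips(lines: Iterable[str]) -> List[str]:
    """Return de-duplicated client IPs (sorted as strings) of lines hitting s8 paths."""
    ips = set()
    for line in lines:
        if S8_PATTERN.search(line):
            fields = line.split()
            if fields:
                ips.add(fields[0])  # first field is the client address
    return sorted(ips)
```

For example, passing an open file handle such as open("access_log") (a hypothetical file name) yields the address list to feed to jwhois.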

I went through the results in s8whois.log and found that almost all of the source IPs were from China. Then I realized why Google doesn't show many results, even cached ones, regarding the s8 probes. Check out the link below -

http://www.baidu.com/s?wd=powered+by+discuz&cl=3

Baidu.com is the most popular search engine in China, and I suspect the attackers are doing something similar, using that search engine to find sites running the targeted vulnerable CMS.

In fact, the automated scripts that look for malicious scripts previously uploaded to vulnerable Discuz-powered sites ought to fingerprint the operating system or web server first (the latter is easier and more accurate) and use better search engine query strings before requesting the malicious scripts; that way they would go unnoticed on Unix-based servers.

I guess system administrators in China (or anyone else running the Discuz CMS) have more headaches than we do -

http://www.discuz.net/thread-433875-3-1.html

All for now, Peace (;])

Temporary solution: block the user agent InetURL:/1.0 via mod_security; all of the requests carry this identical user agent string.

1.2.3.4 - - [08/Jan/2007:17:30:05 +0800] "GET /s8servu.aspx HTTP/1.1" 404 - "-" "InetURL:/1.0"

1.2.3.4 - - [08/Jan/2007:17:30:05 +0800] "GET /s8servu.aspx HTTP/1.1" 404 - "-" "InetURL:/1.0"
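Before committing to a user-agent block like the one above, it is worth confirming that the s8 requests really do share one user agent. A minimal Python sketch, assuming Apache combined log format with the user agent as the last quoted field (the helper name is hypothetical):

```python
import re
from collections import Counter
from typing import Iterable

# Request path from the quoted request line ("GET /path HTTP/1.1") and the
# final quoted field (the user agent) of an Apache combined-format line.
COMBINED = re.compile(r'"[A-Z]+ ([^"\s]+)[^"]*".*"([^"]*)"\s*$')
S8_PATH = re.compile(r"^/s8[a-z0-9]*\.asp", re.IGNORECASE)


def s8_user_agents(lines: Iterable[str]) -> Counter:
    """Count user agents seen on requests for s8-prefixed .asp/.aspx files."""
    agents = Counter()
    for line in lines:
        match = COMBINED.search(line)
        if match and S8_PATH.search(match.group(1)):
            agents[match.group(2)] += 1
    return agents
```

If one agent dominates, as InetURL:/1.0 does in these logs, a mod_security rule on it is a cheap stopgap, though a user-agent string is trivial for the attacker to change.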

Wednesday, January 10, 2007

Wireless traffic analysis - the 802.11

I rarely find online resources on wireless traffic analysis. Then I came across this book, which gives a very good kickstart to performing wireless traffic analysis. I can't vouch for its technical accuracy until I've read it, but it comes recommended.

Yesterday I found two useful links about wireless networks, one at SecurityFocus and another at Uninformed. Both are interesting reads, and I think they help analysts improve their wireless traffic analysis.

If you know any good resources about wireless networks, feel free to comment.

~ Monitor the AIR(WNSM) huh ~

Cheers :]

Monday, January 08, 2007

Blog tagged

My blog has been tagged; becoming a victim is not so bad sometimes.

Somehow people don't know me well enough, so here are 5 things I need to clarify -

1. I'm from neither a military nor an education background; either I or my teachers totally sucked when I was in school. In fact, I'm a self-learner: I learn most things from Google and, yes, some from people I know.

2. No one is a total geek or nerd; there must be something you do besides computing. I swim and play some basketball, which refreshes me when my brain is nearly dead. By the way, if you find yourself hating geeks so much, please love yourself. A popular quote from my friend - "You hate what you are!"

3. I ain't a vampire; I do sleep, but when? I can't even tell myself, so be it.

4. I love no war.

5. .....

Unfortunately, I don't pass tags on.

Cheers ;]

Thursday, January 04, 2007

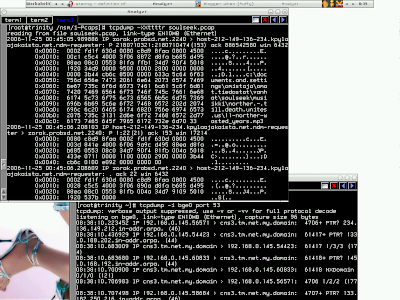

Offline pcap analysis?

Say you are staring at your screen looking for culprits after downloading the logged pcap files. This is considered offline pcap analysis, since you don't contact the network while doing it, but I'm sure most of you sometimes need an internet connection to look up necessary data, so you leave it on.

Most people do not know that they are actually generating network traffic while analyzing pcap files, even as they tell you they are doing it offline. But that is simply not true. Take a look at the screenshot below.

I'm running two virtual terminals; in the first one I'm running -

shell>tcpdump -XXttttr soulseek.pcap

At the same time, I monitor my network interface in the second virtual terminal -

shell>tcpdump -i bge0 port 53

If you look at the second terminal, there is clearly DNS traffic going on. Yes, I'm using tcpdump, but most network analysis tools will try to resolve host addresses or ports whenever possible unless you tell them not to. Do you still consider this offline analysis? I bet not. To really do it offline, you have to run tcpdump with the -n option, so that host addresses and port numbers are no longer converted to names.

shell>tcpdump -XXttttnr soulseek.pcap

Now you will see no network traffic generated; this is real offline pcap analysis. And guess what, you gain extra speed when analyzing large pcap files, since you no longer try to resolve host addresses or ports (see the /etc/services file).

Remember that this applies not only to tcpdump but to many other network analysis tools (argus, wireshark and so forth).

Enjoy (;])

P/S: On Linux, specifying -n alone prevents DNS lookups, but tcpdump will still try to convert port numbers to names; you have to run -nn to avoid resolving anything.
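The -n/-nn distinction maps neatly onto the standard getnameinfo() flags: NI_NUMERICHOST skips the reverse DNS lookup for the address, and NI_NUMERICSERV skips the port-to-service-name lookup. A minimal Python sketch of the choice an analysis tool faces when printing an endpoint; it illustrates the idea and is not how tcpdump itself is implemented. The address is from the 192.0.2.0/24 documentation range, and the helper name is hypothetical.

```python
import socket


def format_endpoint(ip: str, port: int, resolve_host: bool, resolve_port: bool) -> str:
    """Format an endpoint as host.port (tcpdump style), optionally resolving names."""
    flags = 0
    if not resolve_host:
        flags |= socket.NI_NUMERICHOST  # no reverse DNS query (like -n)
    if not resolve_port:
        flags |= socket.NI_NUMERICSERV  # no service-name lookup (like -nn)
    host, service = socket.getnameinfo((ip, port), flags)
    return f"{host}.{service}"


if __name__ == "__main__":
    # Fully numeric: no DNS traffic and no /etc/services lookup.
    print(format_endpoint("192.0.2.1", 53, resolve_host=False, resolve_port=False))
    # prints 192.0.2.1.53
```

Setting resolve_host=True is what sends the DNS queries seen in the second terminal; resolve_port=True typically only consults local service data such as /etc/services.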
